Indian government sets indicative biofuels target: 20% by 2017

The new rules include a provision that discourages imports of biofuels and instead stimulates the creation of plantations in India as a way to boost employment opportunities amongst the rural poor. The policy-making and implementation process includes input from a wide range of stakeholders, including representatives of India's lowest government level (the Panchayati Raj, i.e. the village).

India currently blends all gasoline with 5% ethanol (E5), and pilot projects are under way to test the viability of biodiesel based on non-edible oils. The percentage of ethanol in petrol is to double to 10% (E10) from next month onwards.

The Indian cabinet has now approved the implementation of a new National Biofuel Policy that has set an indicative target of blending 20 per cent ethanol in petrol and 20 per cent biodiesel from non-edible oil (e.g. from jatropha) in diesel, by 2017.
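To make the blending percentages concrete, the following minimal sketch splits a blended volume into its biofuel and fossil fuel parts for E5, E10 and the 20% target. It assumes the percentages are by volume; the function name and the 1000-litre batch are illustrative and not part of the policy.

```python
def blend_volumes(total_litres: float, biofuel_fraction: float) -> tuple[float, float]:
    """Split a blended-fuel volume into (biofuel litres, fossil fuel litres).

    Assumes the blend percentage is expressed by volume.
    """
    if not 0.0 <= biofuel_fraction <= 1.0:
        raise ValueError("biofuel_fraction must be between 0 and 1")
    biofuel = total_litres * biofuel_fraction
    return biofuel, total_litres - biofuel


# Illustrative 1000-litre batch at the current, upcoming and target blend levels
for label, fraction in [("E5", 0.05), ("E10", 0.10), ("E20", 0.20)]:
    ethanol, petrol = blend_volumes(1000.0, fraction)
    print(f"{label}: {ethanol:.0f} L ethanol + {petrol:.0f} L petrol per 1000 L of blend")
```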

The policy calls for scrapping taxes and duties on biodiesel, and 'declared goods status' will be conferred on biodiesel and bioethanol. The 'declared goods status' means that the two fuels will be taxed at a uniform central sales tax or VAT rate rather than at the varied sales tax rates prevalent in India's states. (Oil firms currently buy ethanol at a fixed price of Rs 21.50 per litre [€0.33/liter - US$3.78/gallon], but non-edible oil for biodiesel is purchased at a price linked to prevailing diesel prices.)

Instead of setting up a National Bio-Fuel Development Board, as had been recommended by a Group of Ministers headed by Agriculture Minister Sharad Pawar, the Cabinet constituted a new National Biofuel Coordination Committee headed by the Prime Minister.

Before the biofuels are blended with the fossil fuels, they should go through a series of protocols and certifications, for which the industry and oil marketing companies (OMCs) should jointly set up an appropriate mechanism and the required facilities.

Imports of free fatty acids are prohibited and no import duty rebate will be provided, as such a rebate would hinder the promotion of indigenous plantations of non-edible oil seeds. The Indian government sees the establishment of local plantations as a way to generate employment opportunities in rural areas.

While biodiesel plantations on community or government wastelands are encouraged, the government now discourages the establishment of plantations on fertile or irrigated land.

The cabinet also approved the creation of a National Biofuel Coordination Committee, which would be chaired by the Prime Minister and would have seven member ministers, and gave its nod to setting up a Biofuel Steering Committee.

The Steering Committee would be chaired by the Cabinet Secretary and, along with the National Biofuel Coordination Committee, would be serviced by the Ministry of New and Renewable Energy. The Panchayati Raj (a decentralized government body representing the village level) would also be included as a member of the Steering Committee.

A sub-committee under the Steering Committee, comprising the Department of Biotechnology and the Ministries of Agriculture, New and Renewable Energy, and Rural Development, would aid research on biofuels.

A minimum support price (MSP) for oil seeds will be determined and ensured with provisions for its periodic revision to provide a fair price to farmers, which would be looked into by the Steering Committee.

A Statutory Minimum Price (SMP) mechanism similar to that currently operating for sugarcane will be examined and possibly extended to the oil seeds processing industry which will produce the feedstock for biodiesel.

--------------

Mongabay, a leading resource for news and perspectives on environmental and conservation issues related to the tropics, has launched Tropical Conservation Science - a new, open access academic e-journal. It will cover a wide variety of scientific and social studies on tropical ecosystems, their biodiversity and the threats posed to them.

Plant genetic diversity is crucial for food security and for fighting poverty. Nevertheless, crop plant varieties are disappearing fast, and access to genetic resources is increasingly restricted by commercial interests. A new book by FNI Senior Research Fellow Regine Andersen makes the first comprehensive analysis of how international agreements affect the management of crop genetic resources in developing countries, revealing that the interaction of the agreements has produced largely negative impacts, despite good intentions. The book, titled

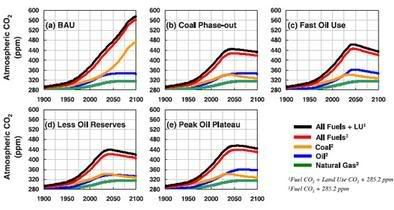

Dr Rajendra Pachauri, Chair of the Intergovernmental Panel on Climate Change, and joint winner of the 2007 Nobel Peace Prize, will present the case that eating less meat can help us fight climate change in an efficient way. Pachauri will deliver the message tomorrow when he presents the Peter Roberts Memorial Lecture titled "Global Warning: the impact of meat production & consumption on climate change", at a conference organised by Compassion in World Farming (CIWF), an animal welfare organisation.

Saturday, September 13, 2008

Anaerobic digestion of livestock manure – an attractive option for renewable power

Salman has been active in the field of renewable energy for the past few years. His areas of expertise include biomass utilization, waste-to-energy conversion and sustainable development. Since obtaining his Master's degree in Chemical Engineering in 2004, he has been involved in industrial research on biomass-to-bioenergy conversion processes in different waste sectors. He has been instrumental in the implementation and successful operation of a 1 MW biogas plant based on animal manure in Punjab (India).

The generation and disposal of organic waste without adequate treatment result in significant environmental pollution. Besides health concerns for the people in the vicinity of disposal sites, degradation of waste leads to uncontrolled release of greenhouse gases (GHGs) into the atmosphere.

Conventional treatment methods, such as aeration, are energy intensive and expensive, and they also generate a significant quantity of biological sludge. In this context, anaerobic digestion offers potential energy savings and is a more stable process for medium and high strength organic effluents. Waste-to-Energy (WTE) plants, based on anaerobic digestion of biomass, are highly efficient in harnessing the untapped renewable energy potential of organic waste by converting the biodegradable fraction of the waste into high calorific gases.

Apart from treating the wastewater, the methane produced from the biogas facilities can be recovered, with relative ease, for electricity generation and industrial/domestic heating.

Anaerobic digestion plants not only decrease GHG emissions but also reduce dependence on fossil fuels for energy requirements. The anaerobic process has several advantages over other methods of waste treatment. Most significantly, it is able to accommodate relatively high rates of organic loading. With increasing use of anaerobic technology for treating various process streams, it is expected that industries will become more economically competitive because of their more judicious use of natural resources. Anaerobic digestion technology is therefore almost certain to see increased use in the future.

Feedstocks

A wide range of feedstocks is available for anaerobic digesters. In addition to municipal solid waste (MSW), large quantities of waste, in both solid and liquid form, are generated by industrial sectors such as breweries, sugar mills, distilleries, food-processing industries, tanneries, and the pulp and paper industry. The food products industry alone contributes nearly 40% of the total organic pollution from industrial sub-sectors.

Food products and agro-based industries together contribute 65% to 70% of the total industrial wastewater in terms of organic load. Poultry waste has the highest energy potential per tonne, but livestock waste has the greatest overall potential for energy generation in the agricultural sector.

Most small-scale units, such as tanneries, textile bleaching and dyeing units, dairies and slaughterhouses, cannot afford effluent treatment plants of their own because of economies of scale in pollution abatement. Recycling, recovery and re-use of products from the wastes of such small-scale units by adopting suitable technology could be a viable proposition. Generation of energy using the anaerobic digestion process has proved to be economically attractive in many such cases.

Anaerobic digestion of livestock manure – a case study

The livestock industry is an important contributor to the economy of any country. More than one billion tons of manure is produced annually by livestock in the United States. Animal manure is a valuable source of nutrients and renewable energy.

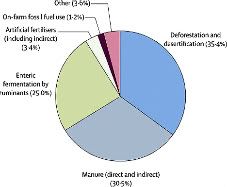

However, most manure is collected in lagoons or left to decompose in the open, which poses a significant environmental hazard. The air pollutants emitted from manure include methane, nitrous oxide, ammonia, hydrogen sulfide, volatile organic compounds and particulate matter, which can cause serious environmental and health problems.

Anaerobic digestion is a unique treatment solution for animal agriculture as it can deliver positive benefits related to multiple issues, including renewable energy, water pollution, and air emissions. Anaerobic digestion of animal manure is gaining popularity as a means to protect the environment and to recycle materials efficiently into the farming systems. Waste-to-Energy (WTE) plants, based on anaerobic digestion of biomass, are highly efficient in harnessing the untapped renewable energy potential of organic waste by converting the biodegradable fraction of the waste into high calorific gases. The biomass of important domestic animals is listed in Fig 3.

In the past, livestock waste was recovered and sold as a fertilizer or simply spread onto agricultural land. The introduction of tighter environmental controls on odour and water pollution means that some form of waste management is necessary, which provides further incentives for biomass-to-energy conversion.

Important factors to consider

The main factors that influence biogas production from livestock manure are the pH and temperature of the feedstock. It is well established that a biogas plant works optimally at a neutral pH and a mesophilic temperature of around 35 °C. The carbon-nitrogen ratio of the feed material is also an important factor and should be in the range of 20:1 to 30:1.
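As a rough illustration of these operating conditions, the sketch below flags feed samples that fall outside the preferred window. The C:N limits of 20:1 to 30:1 are taken from the text; the pH band (6.5 to 7.5) and temperature band (32 to 38 °C) are assumed tolerances around "neutral" and 35 °C, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FeedSample:
    ph: float
    temperature_c: float
    cn_ratio: float  # mass of carbon divided by mass of nitrogen


# The C:N limits (20:1 to 30:1) come from the text.
# The pH and temperature bands are assumed tolerances, not values from the article.
LIMITS = {
    "ph": (6.5, 7.5),
    "temperature_c": (32.0, 38.0),
    "cn_ratio": (20.0, 30.0),
}


def out_of_range(sample: FeedSample) -> list[str]:
    """Return the names of the parameters that fall outside their band."""
    problems = []
    for name, (low, high) in LIMITS.items():
        value = getattr(sample, name)
        if not low <= value <= high:
            problems.append(name)
    return problems


manure = FeedSample(ph=7.0, temperature_c=35.0, cn_ratio=25.0)
print(out_of_range(manure))  # prints []
```

A sample with an acidic pH or an unbalanced C:N ratio would return the offending parameter names, which an operator could use as a prompt to adjust the feed mix.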

Animal manure has a carbon-nitrogen ratio of 25:1 and is considered ideal for maximum gas production. Solid concentration in the feed material is also crucial to ensure sufficient gas production, as well as easy mixing and handling. Hydraulic retention time (HRT) is the most important factor in determining the volume of the digester, which in turn determines the cost of the plant: the longer the retention period, the higher the construction cost.
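The link between retention time and digester size can be sketched with the usual first-order relation: working volume equals daily feed volume multiplied by HRT. The feed rate and retention times below are illustrative assumptions, not figures from the plant described here, and the calculation ignores gas headspace and safety margins.

```python
def digester_working_volume(feed_m3_per_day: float, hrt_days: float) -> float:
    """Working volume (m3) = daily feed volume (m3/day) x hydraulic retention time (days)."""
    if feed_m3_per_day < 0 or hrt_days <= 0:
        raise ValueError("feed must be non-negative and HRT must be positive")
    return feed_m3_per_day * hrt_days


# Hypothetical feed rate; the retention times are chosen only to show the trend
feed = 100.0  # m3 of slurry per day
for hrt in (20, 30, 40):
    volume = digester_working_volume(feed, hrt)
    print(f"HRT {hrt} days -> {volume:.0f} m3 working volume")
```

Doubling the retention time doubles the required volume for the same feed rate, which is why HRT drives construction cost so directly.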

An emerging technological advance in anaerobic digestion that may lead to increased biogas yields is the use of ultrasound to increase volatile solids conversion. This process disintegrates solids in the influent, which increases surface area and, in turn, allows for efficient digestion of biodegradable waste.

Process description of WTE facility based on livestock manure

The layout of a typical biogas facility using livestock manure as raw material is shown in Fig 4. The fresh animal manure is stored in a collection tank before being transferred to the homogenization tank, which is equipped with a mixer to homogenize the waste stream. The uniformly mixed waste is passed through a macerator to obtain a uniform particle size of 5-10 mm and pumped into anaerobic digesters of suitable capacity, where stabilization of the organic waste takes place.

Biogas contains a significant amount of hydrogen sulfide (H2S) gas, which needs to be removed because it is highly corrosive. The removal of H2S takes place in a biological desulphurization unit in which a limited quantity of air is added to the biogas in the presence of specialized aerobic bacteria, which oxidize H2S into elemental sulfur.
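The "limited quantity of air" can be bounded from below with simple stoichiometry. The sketch that follows assumes the overall reaction 2 H2S + O2 -> 2 S + 2 H2O, treats gas volumes as proportional to moles, and takes air as roughly 21% oxygen by volume. The H2S concentration in the example is hypothetical, and a real unit may need more air than this theoretical minimum.

```python
O2_FRACTION_IN_AIR = 0.21  # approximate volume fraction of oxygen in dry air


def stoichiometric_air_m3(biogas_m3: float, h2s_ppmv: float) -> float:
    """Minimum air volume needed to oxidize all H2S to elemental sulfur.

    Based on 2 H2S + O2 -> 2 S + 2 H2O, with gas volumes treated as
    proportional to moles (ideal-gas assumption).
    """
    h2s_m3 = biogas_m3 * h2s_ppmv * 1e-6   # volume of H2S in the biogas
    oxygen_m3 = 0.5 * h2s_m3               # half a mole of O2 per mole of H2S
    return oxygen_m3 / O2_FRACTION_IN_AIR


# Hypothetical example: 1000 m3 of biogas containing 2000 ppmv H2S
air = stoichiometric_air_m3(1000.0, 2000.0)
print(f"Theoretical minimum air: {air:.2f} m3 per 1000 m3 of biogas")
```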

The gas is dried and fed into a CHP unit, where a generator produces electricity and heat. The size of the CHP system depends on the amount of biogas produced daily. The digested substrate is passed through screw presses for dewatering and then subjected to solar drying and conditioning to give high-quality organic fertilizer. The press water is treated in an effluent treatment plant based on the activated sludge process, which consists of an aeration tank and a secondary clarifier. The treated wastewater is recycled to meet in-house plant requirements. A chemical laboratory is necessary to continuously monitor important environmental parameters such as BOD, COD, VFA, pH, ammonia and C:N ratio at different locations for efficient and proper functioning of the process.

The continuous monitoring of the biogas plant is achieved by using a remote control system such as Supervisory Control and Data Acquisition (SCADA) system. This remote system facilitates immediate feedback and adjustment, which can result in energy savings.

Utilization of biogas and digestate

An anaerobic digestion plant produces biogas as well as digestate, which can be further utilized to produce secondary outputs. Biogas can be used for producing electricity and heat, as a natural gas substitute, and as a transportation fuel. A combined heat and power (CHP) system not only generates power but also produces heat for in-house requirements, to maintain the desired temperature level in the digester during the cold season.

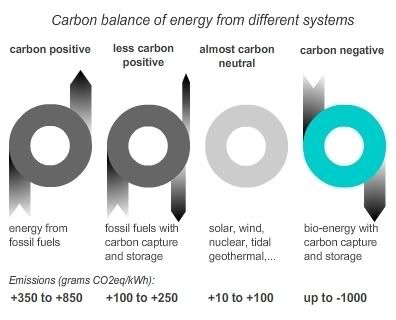

CHP systems cover a range of technologies, but indicative energy outputs per m³ of biogas are approximately 1.7 kWh of electricity and 2.5 kWh of heat. The combined production of electricity and heat is highly desirable because it displaces non-renewable energy demand elsewhere and therefore reduces the amount of carbon dioxide released into the atmosphere.
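These indicative yields make it easy to relate daily biogas production to CHP size. The sketch below uses the 1.7 kWh and 2.5 kWh per m³ figures quoted above; the function names are hypothetical, and the calculation assumes the unit runs continuously at a steady load, which a real plant may not.

```python
ELECTRICITY_KWH_PER_M3 = 1.7  # indicative electrical output per m3 of biogas (from the text)
HEAT_KWH_PER_M3 = 2.5         # indicative heat output per m3 of biogas (from the text)


def chp_daily_output(biogas_m3_per_day: float) -> tuple[float, float]:
    """Return (electricity kWh/day, heat kWh/day) for a given daily biogas volume."""
    return (biogas_m3_per_day * ELECTRICITY_KWH_PER_M3,
            biogas_m3_per_day * HEAT_KWH_PER_M3)


def biogas_needed_for_kw(electrical_kw: float) -> float:
    """Daily biogas volume (m3) needed to sustain a constant electrical output.

    Assumes 24 hours of continuous operation per day.
    """
    return electrical_kw * 24.0 / ELECTRICITY_KWH_PER_M3


electricity, heat = chp_daily_output(1000.0)
print(f"1000 m3/day of biogas -> {electricity:.0f} kWh electricity, {heat:.0f} kWh heat")
print(f"Biogas for a constant 1 MW: {biogas_needed_for_kw(1000.0):.0f} m3/day")
```

Under these assumptions a plant delivering 1 MW of electricity around the clock would need on the order of 14,000 m³ of biogas per day.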

In Sweden, the compressed biogas is used as a transportation fuel for cars and buses. Biogas can also be upgraded and used in gas supply networks. The use of biogas in solid oxide fuel cells is being researched.

The surplus heat energy generated may be utilized through a district heating network. There is thus scope for biogas facilities close to new housing and development areas, particularly if the waste management system can use kitchen and green waste from the housing as a supplement to other feedstock.

Digestate can be further processed to produce liquor and a fibrous material. The fiber, which can be processed into compost, is a bulky material with low levels of nutrients and can be used as a soil conditioner or a low level fertilizer. A high proportion of the nutrients remain in the liquor, which can be used as a liquid fertilizer.

Conclusions

Anaerobic digestion of biomass offers two important benefits: environmentally safe waste management and disposal, and the generation of clean electric power. The growing use of digestion technology as a method to dispose of livestock manure has greatly reduced the environmental and economic impacts of manure disposal.

Biomass-to-biogas transformation mitigates GHG emissions and harnesses the untapped potential of a variety of organic waste. Anaerobic digestion technology affords greater water quality benefits than standard slurry storage due to its lower pollution potential. It also provides additional benefits in terms of meeting the targets under the Kyoto Protocol and other environmental legislation.

The livestock industry is a vitally important contributor to the economy of any country, regardless of the degree of industrialization. Animal manure is a valuable source of renewable energy; additionally, it has soil enhancement properties. Anaerobic digestion is a unique treatment solution for animal agriculture as it can deliver positive benefits related to multiple issues, including renewable energy, water pollution, and air emissions.

Anaerobic digestion of animal manure is gaining popularity as a means to protect the environment and to produce clean energy. There is an urgent need to integrate the digester with manure management systems for effective implementation of the anaerobic digestion technology to address associated environmental concerns and to harness renewable energy potential of livestock.

Salman Zafar is currently working as an independent renewable energy advisor. His articles and studies appear on a regular basis in reputed journals and magazines, both in India and abroad, and on leading web-portals. He can be reached at [email protected].

posted by Biopact team at 7:09 PM